Enterprises rightly fear AI hallucinations. But a quieter, more pervasive threat is source error: agents confidently citing a plausible but wrong document, such as a draft contract or last year’s price list. Preventing this takes more than prompts and metadata tagging. It requires giving AI the ability to pause and think, using chain-of-thought reasoning, coupled with a version-aware DMS. This article explains how to tell AI that sounds smart from AI you can trust.

In today’s enterprise settings, AI agents and assistants do far more than respond to human requests. Some act as gateways to mission-critical information, and semi-autonomous agents can even make decisions based on business content.

Yet even the most advanced AI systems sometimes deliver wrong responses. This happens not because LLMs invent data, but because they draw on the wrong documents, ones that nonetheless look valid.

What makes ‘Source Error’ more problematic than hallucinations?

Most enterprises take hallucinations seriously. Accordingly, they instruct agents to answer only from retrieved enterprise content, not from general model memory. But a subtler, yet serious, risk lurks in company databases and knowledge bases:

- A salesperson asks: “What is the current list price for Product X?” The agent finds five spreadsheets, all labeled “price list,” and picks the one from last year.

- A procurement officer asks: “What are the payment terms for Supplier Y?” The agent retrieves both a draft contract and the signed contract — and unknowingly returns the draft.

In both cases, the agent is not hallucinating. It is choosing between plausible but conflicting sources, at least one of which is outdated or unvalidated. This phenomenon is also known as ‘AI version drift’.

This happens because LLMs tend to produce an answer even when they are uncertain, a trait that makes errors in enterprise contexts risky. Consider the governance and compliance exposure, the potential impact on the business, and the effect on customer satisfaction.

Or all of these risks combined!

This may sound like a nightmare scenario. But the danger is real: your organization’s AI might confidently, wrongly, and quite innocently lead employees down the wrong path.

Metadata and prompts: Must-haves that aren’t enough

To mitigate these errors, organizations typically deploy a new-generation Document Management System (DMS) that adds two layers of defense to enterprise AI:

- Prompt constraints, ensuring the AI confines itself to the retrieved files.

- Version metadata and tags, marking files as “approved,” “final,” or “draft,” to guide ranking.

These are essential guardrails — yet they don’t fully address the problem of ambiguity. When multiple legitimate documents qualify, the system still needs a way to evaluate which one to trust.
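To see why tags alone leave a gap, here is a minimal Python sketch of status-based ranking. The `TaggedDoc` type, the status labels, the weights, and the file names are illustrative assumptions, not the API of any particular DMS.

```python
from dataclasses import dataclass

# Hypothetical status weights; a real DMS would define its own labels.
STATUS_WEIGHT = {"approved": 2, "final": 2, "draft": 0}


@dataclass(frozen=True)
class TaggedDoc:
    name: str
    status: str


def rank_by_status(docs):
    """Sort documents so that higher-weighted statuses come first."""
    return sorted(docs, key=lambda d: STATUS_WEIGHT.get(d.status, 0), reverse=True)


def top_ties(docs):
    """Return every document that shares the best status weight."""
    ranked = rank_by_status(docs)
    if not ranked:
        return []
    best = STATUS_WEIGHT.get(ranked[0].status, 0)
    return [d for d in ranked if STATUS_WEIGHT.get(d.status, 0) == best]


docs = [
    TaggedDoc("price_list_2023.xlsx", "approved"),
    TaggedDoc("price_list_2024.xlsx", "approved"),
    TaggedDoc("price_list_2024_draft.xlsx", "draft"),
]
ties = top_ties(docs)  # both approved files remain; the tag cannot break the tie
```

In this example the tag removes the draft, but two approved price lists still tie, so something beyond the tag has to decide which one is current.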

This is where Chain of Thought reasoning can be a valuable ally in the battle for improved precision, reliability, and productivity.

What is Chain of Thought reasoning?

Chain of Thought (CoT) prompting is a technique that encourages an AI model to break down its logic into discrete, sequential steps rather than leap to an answer. As IBM describes it, CoT enables a model to simulate “thinking out loud” — walking through the reasoning path one step at a time.

This stepwise reasoning brings three core advantages:

- Accuracy in complex scenarios — especially when multiple inference steps are needed.

- Transparency — the reasoning path can be exposed or audited.

- Self-checking — the model can detect inconsistencies or ambiguity before committing to a final answer.

Instead of acting like a black-box “smart search,” CoT nudges the assistant to think like someone evaluating multiple documents carefully — not hurrying to respond.
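As a rough illustration, a chain-of-thought prompt for source selection might be assembled as in the Python sketch below. The function name `build_cot_prompt`, the wording of the steps, and the document summaries are assumptions made for this example; they are not taken from IBM or from any vendor's product.

```python
def build_cot_prompt(question, doc_summaries):
    """Assemble a prompt that asks for stepwise source evaluation before an answer."""
    lines = [
        "Answer using only the documents listed below.",
        "Before answering, reason step by step:",
        "1. List the documents that could answer the question.",
        "2. Note conflicts between them (dates, status, values).",
        "3. Choose the most trustworthy document and state why.",
        "4. Give the final answer and cite the chosen document.",
        "",
        "Documents:",
    ]
    lines += [f"- {summary}" for summary in doc_summaries]
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


prompt = build_cot_prompt(
    "What is the current list price for Product X?",
    [
        "price_list_2023.xlsx (approved, 2023-01-15)",
        "price_list_2024.xlsx (approved, 2024-01-10)",
    ],
)
```

The numbered steps are what make the reasoning path visible: each one produces text that can be inspected before the final answer is accepted.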

How does modern document management apply CoT reasoning?

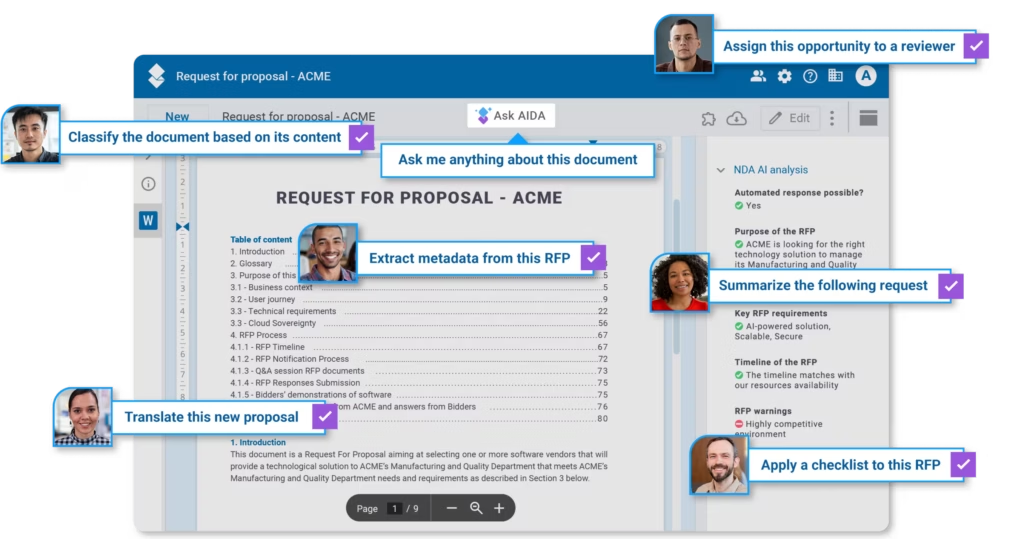

New-generation Document Management Systems (DMS) such as AODocs embed Chain of Thought reasoning within AI agents and assistants to reduce source errors.

The process works as follows:

- The AI agent extracts a set of documents relevant to the query (e.g., multiple “price list” files).

- Before answering, it looks for ambiguities: similar names, overlapping dates, and conflicting metadata.

- If ambiguity exists, it triggers a secondary analysis — for example, filtering to only validated documents, or sorting by date to pick the most recent approved version.

- Only once the best candidate is selected does it formulate the answer.

This extra reasoning loop mirrors how a human would act when confronted with multiple plausible documents: pause, compare, eliminate, then pick the best.

CoT reasoning gives the AI the ability to “pause and think” before it speaks. In doing so, it reduces the risk of confident but incorrect responses based on outdated versions of company files.
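The four steps listed earlier in this section can be sketched in Python roughly as follows. This is an illustration under stated assumptions, not AODocs' implementation: the `Doc` fields, the `"approved"` label, and the choice to decline when no approved document exists are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Doc:
    name: str
    status: str   # assumed labels, e.g. "approved" or "draft"
    updated: date


def select_source(candidates):
    """Pick one document and return it with a trace of the reasoning steps."""
    trace = [f"retrieved {len(candidates)} candidate(s)"]
    if not candidates:
        trace.append("no candidates; decline to answer")
        return None, trace
    if len(candidates) == 1:
        trace.append("no ambiguity; using the only candidate")
        return candidates[0], trace

    # Ambiguity check: more than one plausible document was retrieved.
    trace.append("ambiguity detected; running secondary analysis")

    # Secondary analysis, step 1: keep only validated documents.
    approved = [d for d in candidates if d.status == "approved"]
    trace.append(f"{len(approved)} approved candidate(s) remain")
    if not approved:
        trace.append("no approved document; decline to answer")
        return None, trace

    # Secondary analysis, step 2: prefer the most recent approved version.
    best = max(approved, key=lambda d: d.updated)
    trace.append(f"selected {best.name} (most recent approved)")
    return best, trace


candidates = [
    Doc("price_list_2023.xlsx", "approved", date(2023, 1, 15)),
    Doc("price_list_2024.xlsx", "approved", date(2024, 1, 10)),
    Doc("price_list_2024_draft.xlsx", "draft", date(2024, 3, 1)),
]
chosen, trace = select_source(candidates)
```

Note that the draft is the newest file by date, yet it is excluded before the date comparison runs. Filtering on validation status first, then sorting by date, is what keeps a recent draft from outranking the approved version.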

So what should IT leads consider when evaluating AI-powered DMS?

When you assess document management systems that claim to enable AI-driven processes, here are key points to probe:

- Does the document management system use metadata and version control as first-class inputs to ranking?

- Does it support multi-step reasoning or internal re-checks (i.e., chain of thought)?

- Can you audit or log the reasoning path when the system makes a decision?

- How does the system perform under ambiguity — e.g. multiple plausible sources — rather than in “clean” test cases?
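To make the audit question concrete, here is a minimal Python sketch of what a logged reasoning path could look like. The record fields, the `audit_record` helper, and the file name are hypothetical; an actual product may log different data in a different format.

```python
import json
from datetime import datetime, timezone


def audit_record(query, chosen_doc, steps):
    """Serialize one source-selection decision as a JSON line for later review."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "query": query,
        "chosen_document": chosen_doc,
        "reasoning_steps": list(steps),
    }
    return json.dumps(record)


line = audit_record(
    "What are the payment terms for Supplier Y?",
    "supplier_y_contract_signed.pdf",
    ["retrieved 2 candidates", "draft excluded", "signed contract selected"],
)
```

A record like this lets a reviewer check not only which document was used, but also why the alternatives were rejected.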

Chain of thought reasoning isn’t a silver bullet. It adds compute cost and complexity.

But in environments with many document variants, it can mean the difference between AI that sounds smart and AI you can trust.

Find out more about AODocs AI:

aodocs.com/products/trusted-ai/
